Where Should Your Crypto App Live? Balancing Speed, Privacy, and Cost in 2025

Last month, in a sunlit coworking space, our blockchain dashboard froze for a heartbeat. The bug wasn’t in our smart contracts or our backend logic; it was in where the data lived. The RPC service we depended on flitted between regions, and the latency spike turned a smooth user experience into a moment of hesitation. In that quiet pause, a brutal question surfaced: where should this app actually live? In one cloud region, behind a private network, or stitched together with decentralized data paths that you don’t control? The answer isn’t simply “cloud good, decentralized bad.” It’s about balance—between speed, cost, and governance, and about building an architecture that your team can actually operate, day in and day out.

What’s changed in hosting for blockchain apps by late 2025 isn’t a single new technology, but a shift in mindset. Fully managed node services on mainstream clouds promise reliability with less ops toil. Pay-as-you-go RPC pricing introduces cost flexibility as traffic scales. Decentralized RPCs and DePIN networks offer a way to diversify risk and tap into community-governed data layers. And privacy-preserving approaches—zero-knowledge channels and confidential computing—are no longer experiments but practical options for regulated industries. I’ve seen teams move from chasing the newest gadget to designing blends: core workloads on trusted cloud nodes, supplemented by resilient, decentralized data paths for redundancy and broader ecosystem risk diversification.

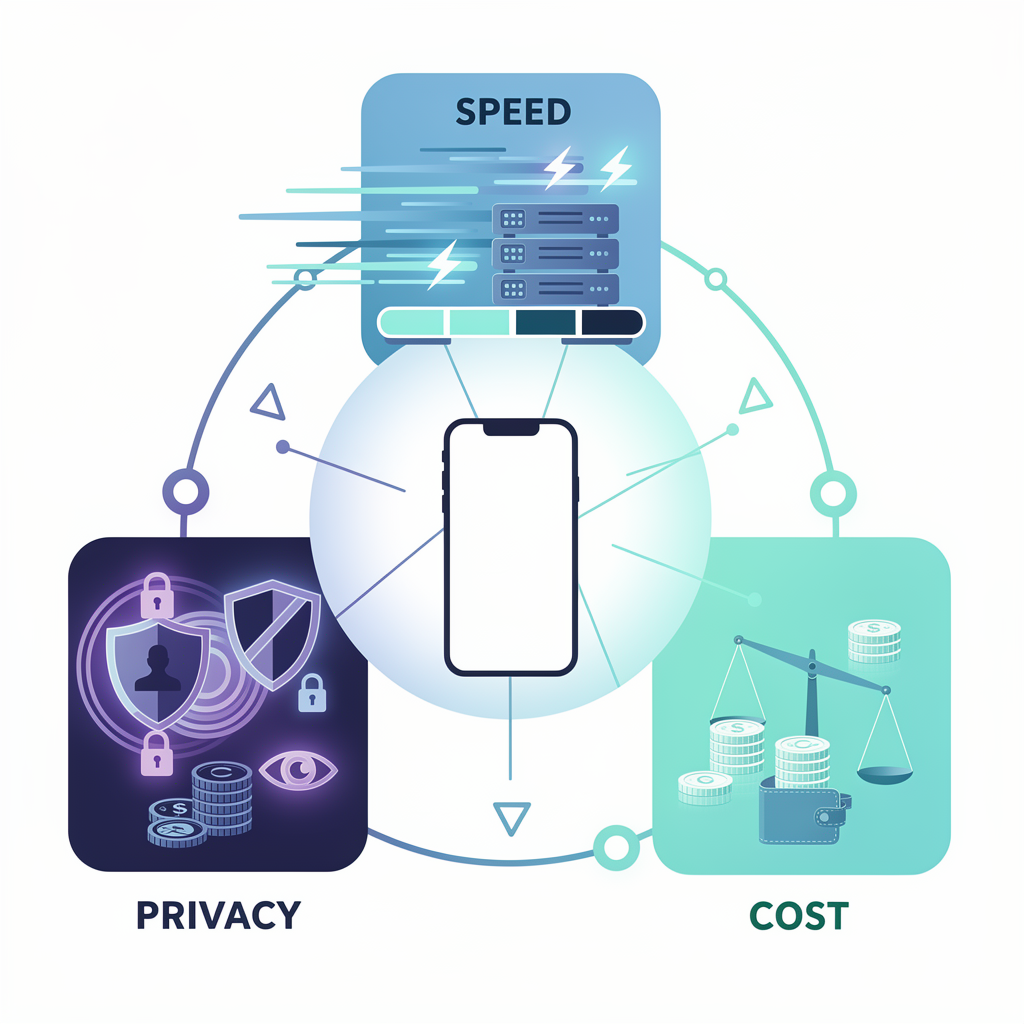

This piece doesn’t pretend to hand you a single universal blueprint. Instead, it invites you to think through hosting as a set of choices that you tailor to your product’s needs, budgets, and governance constraints. These choices aren’t mutually exclusive; they complement one another. The right mix depends on what you’re willing to trade off: latency vs. diversity of data sources, predictable pricing vs. appetite for operational complexity, and the level of privacy you must guarantee for your users.

What I learned, and what you’ll see echoed in the latest industry chatter, is that the best path isn’t a choice you make once; it’s a continuum you keep adjusting. The major cloud players are making it easier to run production-grade nodes with regional options and robust security features. Google Cloud’s Blockchain Node Engine, for example, supports fully managed Ethereum nodes with regional placements, TLS endpoints, and protection against DDoS, with transparent pricing by node type and deployment region. AWS, for its part, highlights private subnets and KMS integration for scalable, secure node operations. This isn’t merely about uptime; it’s about decreasing the cognitive load of running a crypto API so you can focus on product differentiation rather than ops wrangling.

Meanwhile, pricing is loosening its old rigidity. Alchemy’s Pay As You Go model (effective early 2025) eliminates minimum commitments and introduces volume-based discounts, aiming to make production workloads more cost-predictable as traffic grows. Infura has followed with a credits-based pricing framework, offering tiers that scale with demand and enterprise options for auto-scaling and SLAs. For teams mindful of cost, this is a signal that you can start lean and grow without a cliff of surprises when user growth accelerates.

Beyond pure cloud hosting, a broader ecosystem is nudging us toward diversification. Decentralized RPC networks (SubQuery Network and similar initiatives) are building out distributed RPCs and data indexing across ecosystems, letting dApps fetch on-chain data through operators you don’t run yourself. The Graph Foundation’s Sunrise decentralization and ongoing work point to a future where access to on-chain data doesn’t come solely from a single provider’s API, but from a permissionless, incentive-driven network of contributors. In parallel, DePIN projects like Render Network and io.net are expanding GPU compute capacity for off-chain workloads such as AI inference and rendering, creating new options for compute-intensive tasks alongside blockchain workloads.

All of this has a practical, day-to-day implication: your hosting choice should be a deliberate, ongoing design decision rather than a one-time setup. A core illusion to dispel is that “more cloud equals more reliability.” In reality, reliability is a tapestry—tight SLAs, regional resilience, diverse data sources, and clear governance controls. It’s possible to blend managed cloud nodes for core operations with decentralized RPCs as a resilience layer, while experimenting with privacy-preserving channels where regulation requires it. It’s also entirely reasonable to start small, quantify costs and latency, and expand into more complex topologies as your product matures.

So how should you begin this journey? Start by mapping data flows and latency sensitivity. Where do your users feel the most impact when an RPC is slow or a node becomes temporarily unavailable? Which data needs the strongest privacy guarantees, and which can be exposed to broader, permissionless data networks? From there, consider a staged approach:

- Establish a trusted core: run production workloads on managed cloud nodes that you can rely on for uptime and predictable security controls. This gives your developers a solid base and your customers a stable experience.

- Introduce redundancy with decentralized data paths: layer in decentralized RPCs or data indexing networks to reduce single points of failure and to test latency across geographies. This can improve resilience and potentially lower long-term costs at scale.

- Add privacy options where needed: deploy privacy-preserving channels or confidential computing features if you operate in regulated spaces or handle sensitive user data.

- Build cost controls into the architecture: use pay-as-you-go or credits-based models to scale cost with traffic, and implement guardrails to prevent budget overruns during spikes.
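
One way to make that last bullet concrete is a small spend tracker that sits next to your RPC client. The sketch below is illustrative only: the `BudgetGuard` class, its thresholds, and the per-million-request price passed to `record` are assumptions made up for the example, not any provider’s actual pricing or API.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    """Tracks metered RPC spend against a monthly budget (all figures hypothetical)."""

    monthly_budget_usd: float
    alert_fraction: float = 0.8  # warn once this share of the budget is used
    spent_usd: float = 0.0

    def record(self, requests: int, usd_per_million: float) -> str:
        """Add the cost of a batch of requests and return the resulting state."""
        self.spent_usd += requests / 1_000_000 * usd_per_million
        return self.state()

    def state(self) -> str:
        if self.spent_usd >= self.monthly_budget_usd:
            return "cap"    # e.g. shed non-critical traffic or pause batch jobs
        if self.spent_usd >= self.alert_fraction * self.monthly_budget_usd:
            return "alert"  # e.g. notify whoever owns the budget
        return "ok"
```

What happens in the `"alert"` and `"cap"` states is a product decision: some teams only page a human, others throttle background jobs first and leave user-facing calls untouched.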

As you experiment, keep your decision-making transparent within the team. What surprised you in the outcomes? Which suppliers provided the most reliable support under degraded conditions? And perhaps most importantly, what new questions did your experiments reveal about data sovereignty, governance, and trust in distributed systems?

What would you test first if you could redesign your hosting stack from scratch, with today’s options in mind? Would you prioritize latency, cost predictability, or strict regulatory compliance? If you could design a hosting layer that makes trust almost invisible to users, what would that layer look like—and what would you need to prove to your stakeholders to get there?

Where should your blockchain app actually live? A practical, evolving guide to hosting in 2025

Last month, in a sunlit coworking space, our blockchain dashboard froze for a heartbeat. The bug wasn’t in the smart contracts or the backend logic; it was in where the data lived. The RPC service we depended on drifted between regions, and a latency spike turned a smooth user experience into a moment of hesitation. In that quiet pause, a piercing question surfaced: where should this app actually live?

The answer is not a single location. It’s a balance between speed, cost, governance, and the kind of resilience your product demands. Hosting blockchain apps today isn’t about choosing one path; it’s about designing a blend that grows with your product, without leaving you hostage to any single provider.

What you’ll find here is a grounded, practical map built from the latest moves in 2025: fully managed node services on mainstream clouds, flexible pay-as-you-go RPC pricing, decentralized RPCs and DePIN compute layers, and privacy-oriented options that are finally practical for regulated contexts. I’ll share concrete steps, real-world trade-offs, and initial experiments you can run now.

If you’re deciding for a fintech, crypto API, or blockchain-enabled service, you’re not alone in asking: how do we cover reliability, latency, cost predictability, and data governance without sacrificing speed or simplicity? The answer isn’t a single product. It’s a carefully staged architecture that evolves with your users and your risk posture.

Recent shifts you should know about include: cloud providers rolling out production-grade node services to reduce ops toil; pricing models moving toward use-based credits and pay-as-you-go plans; a growing ecosystem of decentralized RPCs and data networks that diversify risk; and privacy-preserving techniques becoming standard options for regulated environments. This is not a revolution so much as a re-composition of the stack: core workloads on trusted cloud nodes, complemented by resilient, decentralized data paths, and privacy options where required by policy or business needs. For context and credibility, you’ll find industry developments referenced inline with sources like Google Cloud’s Blockchain Node Engine, Alchemy’s pay-as-you-go model, Infura’s credits-based pricing, SubQuery Network’s decentralized RPCs, The Graph’s Sunrise decentralization, and privacy-focused research and regulations shaping enterprise hosting choices.

The hosting options shaping the landscape

- Fully managed cloud nodes for production workloads
  - Google Cloud’s Blockchain Node Engine provides fully managed Ethereum nodes with regional deployment options, TLS endpoints, and Cloud Armor protection. Pricing is transparent by node type and deployment region, designed to reduce ops overhead and improve developer iteration speed. This is a strong base for teams that want predictable reliability without building their own node infrastructure from scratch. [Source: Google Cloud]
  - AWS Managed Blockchain offers scalable, secure node hosting for Hyperledger Fabric and Ethereum, with private subnets and KMS-based security, emphasizing governance, private networking, and ease of integration with existing AWS controls. [Source: AWS]

- Flexible RPC/API pricing and infrastructure economics
  - Alchemy’s Pay As You Go pricing (effective Feb 1, 2025) removes minimum commitments and introduces volume discounts, aiming to align costs with actual usage as traffic grows. Node/API data pricing has also been refreshed to lower rates. This reduces the barrier to production-scale experiments and helps teams forecast costs more accurately. [Source: Alchemy]
  - Infura’s tiered, credits-based pricing supports large-scale apps with auto-scaling options and enterprise SLAs, enabling predictable budgeting for multi-network workloads. [Source: Infura]

- Decentralized RPCs and data networks for resilience
  - SubQuery Network is expanding decentralized RPCs and indexing for many ecosystems, enabling apps to fetch on-chain data through globally distributed operators and reducing reliance on a single centralized API layer. [Source: SubQuery]
  - The Graph Foundation’s Sunrise decentralization illustrates a broader shift to permissionless data networks, where data access is increasingly provided by a network of indexers and delegators rather than a single vendor. [Source: The Graph]

- DePIN compute networks for off-chain workloads
  - Decentralized GPU compute networks (Render Network, io.net) are maturing to host AI inference, rendering, and other compute-intensive tasks, offering complementary capacity to blockchain workloads without locking you into a single cloud provider. [Source: Render Network; io.net]

- Privacy, security, and regulatory considerations
  - Enterprise privacy technologies, namely zero-knowledge proofs and confidential computing, are moving from research to practical deployment, offering private data channels and confidential on/off-chain interactions as options for regulated industries. [Source: ArXiv and industry discussions]

- Regulatory backdrop and implications for hosting
  - The evolving regulatory environment in 2025 shapes how you design data retention, custody controls, and compliance tooling, all of which influence hosting choices and architecture decisions. [Source: Reuters coverage of US regulatory signals]

How to think about the trade-offs (in plain terms)

- Latency vs. reliability: Managed cloud nodes give you strong uptime SLAs and predictable security controls. Decentralized RPCs add resilience and geographic breadth but introduce variability that you’ll need to manage with clever routing and monitoring.

- Costs vs. predictability: Pay-as-you-go and credits-based pricing let you align spend with traffic, but you’ll want guardrails, alerts, and budget reviews to avoid surprises during spikes.

- Governance and control: Centralized cloud nodes give you consistent governance tooling. Decentralized layers offer resilience and openness, but require governance interfaces you and your team can operate—bonding with a community, validators, or indexers takes discipline.

- Privacy needs: If you handle regulated data, you may need private channels or confidential computing. If not, you can experiment with open data paths to accelerate development and reduce vendor lock-in.

The practical upshot is not a single choice but a design that can evolve. You can start with a solid core in a trusted cloud, layer in decentralized paths for resilience, and add privacy controls where required. This enables you to optimize for latency, cost, and governance across different phases of your product’s lifecycle.

A practical blueprint you can try now

1) Map your data flows and latency sensitivity

– Identify which RPC calls are most latency-sensitive for your users and which data sources need the strongest privacy guarantees.

– Question to consider: If an RPC goes down, what is the maximum acceptable outage window, and which user journeys would be affected most?

2) Establish a trusted core with a production-grade host

– Start with a core workload on a managed node service (e.g., a production Ethereum node on Google Cloud’s Blockchain Node Engine or AWS Managed Blockchain). Ensure SLA alignment, region strategy, TLS endpoints, and DDoS protection are in place.

– Implement robust credentials management (e.g., region-specific keys, secret rotation) and integrate with your existing security controls.

3) Layer in redundancy with decentralized data paths

– Add one or more decentralized RPC/data networks (e.g., SubQuery Network, or The Graph as a data source) to fetch data through multiple operators. This reduces single points of failure and offers a path to regional resilience.

– Monitor latency across paths and compare cost vs. reliability across providers. Use traffic routing to prefer the fastest consistently available path during normal operation, with automatic failover during outages.

4) Introduce privacy options where needed

– For regulated data or user-facing privacy requirements, deploy zero-knowledge or confidential channels where feasible, and document data handling transparently for stakeholders.

5) Build cost controls and governance into the architecture

– Use pay-as-you-go or credits-based pricing with clear budgets, alerts, and caps. Establish guardrails so a traffic spike doesn’t derail your financial plan.

– Create a governance plan for provider selection, data-source routing, and reliability targets. Schedule regular reviews to adapt to changing conditions or new offerings.

6) Monitor, learn, and adapt

– Instrument reliability, latency, error budgets, and data consistency across paths. Treat the hosting architecture as a product you continuously improve.

– Document what works, what doesn’t, and why. Use those learnings to guide subsequent experiments and future phases.
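
The routing rule from step 3 (prefer the fastest consistently available path, fail over automatically during outages) can be sketched in a few lines. Treat this as a minimal illustration under stated assumptions rather than a production router: the `Endpoint` and `FailoverRouter` names are hypothetical, the URLs would be placeholders, and `send` is an injected transport function you would supply yourself (for example, an HTTP JSON-RPC POST).

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Endpoint:
    name: str
    url: str                     # placeholder, not a real provider URL
    avg_latency_ms: float = 0.0  # moving average; stays 0.0 until first measured
    healthy: bool = True


class FailoverRouter:
    def __init__(self, endpoints: List[Endpoint],
                 send: Callable[[str, Any], Any],
                 smoothing: float = 0.2) -> None:
        self.endpoints = endpoints
        self.send = send          # injected transport (assumption: raises on failure)
        self.smoothing = smoothing

    def ranked(self) -> List[Endpoint]:
        # Healthy endpoints first, then lowest average latency.
        return sorted(self.endpoints,
                      key=lambda e: (not e.healthy, e.avg_latency_ms))

    def call(self, payload: Any) -> Any:
        errors = []
        for ep in self.ranked():
            start = time.monotonic()
            try:
                result = self.send(ep.url, payload)
            except Exception as exc:  # any transport error triggers failover
                ep.healthy = False
                errors.append(f"{ep.name}: {exc}")
                continue
            elapsed_ms = (time.monotonic() - start) * 1000.0
            # Exponentially weighted moving average of observed latency.
            ep.avg_latency_ms += self.smoothing * (elapsed_ms - ep.avg_latency_ms)
            ep.healthy = True
            return result
        raise RuntimeError("all endpoints failed: " + "; ".join(errors))
```

Two design choices are worth noting. Unhealthy endpoints are ranked last rather than removed, so they get retried (and can recover) only when everything ahead of them fails. And because unmeasured endpoints start at 0.0 ms, each one gets tried at least once before the ranking settles. A real deployment would likely add timeouts, retry limits, and periodic health probes.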

Quick-start experiments you can run in the next sprint

- Experiment 1: Core+Decentralized Split
  - Run production workloads on a core cloud node, and route a portion of traffic through a decentralized RPC path. Measure latency, success rate, and cost. Compare outcomes over a 2–4 week window.

- Experiment 2: Privacy-First Channel Pilot
  - Implement a privacy-preserving channel for a subset of data flows and analyze performance impact and regulatory alignment.

- Experiment 3: Cost Runway and Scaling
  - Model traffic growth with the pay-as-you-go/credits pricing, set up budget alerts, and test auto-scaling scenarios to observe impact on spend and latency.
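
For Experiment 1, the two mechanical pieces are deciding which requests take the decentralized path and summarizing what each path did. Here is a rough sketch of both, assuming you can attach a stable request or session ID to each call and log a latency and a success flag per call. The function names and the nearest-rank p95 definition are my choices for illustration, not a standard.

```python
import hashlib
import math
from typing import Dict, List, Optional, Tuple


def choose_path(request_id: str, decentralized_share: float) -> str:
    """Deterministically send a fixed share of requests down the decentralized path."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return "decentralized" if bucket < decentralized_share else "core"


def summarize(samples: List[Tuple[float, bool]]) -> Dict[str, Optional[float]]:
    """Summarize one path from (latency_ms, succeeded) samples."""
    if not samples:
        return {"success_rate": None, "p95_ms": None}
    latencies = sorted(lat for lat, ok in samples if ok)
    success_rate = sum(1 for _, ok in samples if ok) / len(samples)
    # Nearest-rank 95th percentile over successful calls only.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1] if latencies else None
    return {"success_rate": success_rate, "p95_ms": p95}
```

Hashing the ID (rather than picking randomly per call) keeps a given user or session on one path for the whole experiment, which makes the comparison easier to reason about. Start with a small share, such as `choose_path(session_id, 0.05)`, and raise it as confidence grows.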

A couple of mini case studies (illustrative, not exhaustive)

- Fintech API platform
  - Core: Google Cloud Blockchain Node Engine for production Ethereum access with regional redundancy.
  - Resilience: SubQuery RPCs for data indexing to diversify dependence on a single RPC provider.
  - Privacy: Confidential computing options for sensitive transaction data in non-public channels.
  - Outcome: Reduced ops toil and more predictable costs, while maintaining regulatory controls and improving data access resilience.

- Crypto wallet dApp with DeFi integrations
  - Core: AWS or Google Cloud nodes for core API surface.
  - Data access: The Graph Sunrise-enabled data network to broaden data sources.
  - Compute offloads: GPU compute grid (Render/io.net) for wallet-related analytics or AI-powered features.
  - Outcome: Flexible compute and data access that scales with user demand while enabling experimentation with AI-powered features.

What I’d test next if I redesigned hosting from scratch (with today’s options)

- How do latency, reliability, and cost trade-offs shift when adding decentralized RPCs as a standard layer?

- Can privacy-preserving channels be deployed without unacceptable performance penalties for typical user journeys?

- What governance model would give us the most agility while satisfying compliance and risk controls?

- How can we instrument and compare provider performance across a 3–6 month horizon to decide where to invest more deeply?

If you’re designing a hosting layer that makes trust almost invisible to users, what would that layer look like—and what would you need to prove to stakeholders to get there? Could you build a strategy that feels seamless to users but is actually a carefully engineered blend of core cloud reliability, diversified data paths, and privacy safeguards?

Final reflections: a living, evolving posture

Hosting blockchain apps in 2025 isn’t about chasing the latest gadget; it’s about constructing a durable, adaptable stack. The major cloud players have lowered operational barriers, pricing is finally aligned with usage, and decentralized data networks provide compelling resilience options. The practical takeaway is simple to articulate but hard to execute well: design for your product’s data flows, latency needs, and governance constraints, then iterate. Start with a trusted core, layer in redundancy, and always keep cost-effective controls in view as you scale.

What would you test first if you could redesign your hosting stack today, given today’s options? Which data path would you trust the most, and where would you leave room for experimentation? And above all, how would you explain your hosting choices to stakeholders in a way that makes trust feel natural, not manufactured?


References and context for further reading (for your exploration, not as a checklist):

– Google Cloud Blockchain Node Engine features and pricing: regional deployments, TLS endpoints, Cloud Armor, node-hour pricing. [Google Cloud]

– AWS Managed Blockchain capabilities for Hyperledger Fabric and Ethereum, with VPC and KMS integration. [AWS]

– Alchemy Pay As You Go pricing and multi-chain support. [Alchemy]

– Infura pricing and enterprise options with credits-based usage. [Infura]

– SubQuery Network for decentralized RPCs and indexing across ecosystems. [SubQuery]

– The Graph and Sunrise decentralization for permissionless data access. [The Graph]

– DePIN compute networks (Render Network, io.net) for GPU and AI workloads. [Render Network; io.net]

– Privacy and confidential computing discussions relevant to enterprise hosting. [ArXiv 2509.16390 and related sources]

– Regulatory developments and their indirect impact on hosting choices (US context and crypto banking pathways). [Reuters]

This is a living guide. It invites you to test, learn, and iterate—with the end goal not being a final answer, but a reliable approach you can evolve along with your product and audience.

What this journey adds up to

Last month’s heartbeat pause wasn’t a failure of code so much as a nudge toward a different way of thinking about hosting. The truth is not a single silver bullet but a continuum: core workloads anchored in trusted cloud nodes, complemented by diverse, resilient data paths, with privacy options dialed in where regulation or policy demand them. In 2025, the most durable architectures mix predictability with flexibility, tapping pay-as-you-go economics, decentralized RPCs, and DePIN compute as needed to balance latency, cost, and governance.

This is less about chasing the newest gadget and more about designing for your product’s real data flows and user journeys. It means treating hosting as a living choice, reviewed and revised as traffic, regulation, and team capabilities evolve. When you widen your lens, you see the same edge cases reframed: What happens if an RPC hiccups? Which data must stay private? Which paths give you the fastest, most robust experience without locking you in?

In practical terms, the right answer isn’t a fixed recipe; it’s a spectrum you continuously adjust. Fully managed nodes on mainstream clouds reduce ops toil, while decentralized RPCs and DePIN networks broaden resilience and reduce single points of failure. Privacy-preserving channels and confidential computing are not exotic add-ons but practical tools for regulated environments. And pricing models finally align with usage, inviting experimentation without the fear of sudden cost cliffs.

As you think through this, remember: the move toward trust in hosting isn’t only about technology—it’s about governance, transparency, and measurable reliability. Your architecture should tell a story to your team and to your users about where data lives, who controls it, and how it stays available when it matters most.

What I’m learning, and what you’ll likely notice in your own teams, is that the best path is a series of measured experiments. Start lean, quantify latency and cost, and iterate toward a topology that grows with your product—and your risk posture.

What you can do next (concrete steps)

- Map data flows and latency sensitivity
  - Identify which RPC calls most affect user journeys and which data require the strongest privacy guarantees.
  - Define an acceptable outage window for critical paths and plan safe fallbacks.

- Establish a trusted core hosting base
  - Choose a production-grade cloud node service (e.g., regional Ethereum nodes) with SLA alignment, TLS endpoints, and DDoS protection.
  - Implement region-specific credentials management and rotate secrets regularly.

- Layer in redundancy with decentralized data paths
  - Add one or two decentralized RPC/data networks to test data flow diversification.
  - Measure latency, reliability, and cost across paths; set automatic failover rules for the normal and degraded states.

- Introduce privacy options where needed
  - Deploy privacy-preserving channels or confidential computing where regulatory or business needs demand it.
  - Document data handling practices so stakeholders can see how privacy is being achieved in practice.

- Build cost controls and governance into the architecture
  - Use pay-as-you-go or credits-based pricing with budgets, alerts, and caps; establish guardrails to prevent runaway costs.
  - Create a governance plan for provider selection, data-source routing, and reliability targets; schedule regular review checkpoints.

- Monitor, learn, and adapt
  - Instrument reliability metrics, latency budgets, error rates, and data consistency across paths.
  - Maintain a living record of what works, what doesn’t, and why, to guide future iterations and onboarding.
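
“Data consistency across paths” is the metric teams tend to skip, so here is one simple way to approach it: ask each provider for its latest block and flag any that trail the rest. The sketch assumes Ethereum-style providers answering the standard `eth_blockNumber` JSON-RPC method with a hex quantity. The helper names and the two-block tolerance are illustrative assumptions, and fetching the responses is left to your own client.

```python
from typing import Dict, List


def parse_block_number(response: dict) -> int:
    """Decode the hex quantity returned by an eth_blockNumber JSON-RPC call."""
    return int(response["result"], 16)


def find_lagging_providers(head_blocks: Dict[str, int],
                           max_lag_blocks: int = 2) -> List[str]:
    """Return providers whose latest block trails the highest reported head."""
    if not head_blocks:
        return []
    best = max(head_blocks.values())
    return sorted(name for name, head in head_blocks.items()
                  if best - head > max_lag_blocks)
```

A provider that shows up here repeatedly is a candidate for lower routing priority, even if its latency looks good: fast answers from a stale node are still stale.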


Quick-start experiment ideas for your next sprint

- Core + Decentralized Split: route a portion of production traffic through a decentralized path and compare against the core cloud path over 2–4 weeks.

- Privacy Pilot: run a subset of flows through a privacy-preserving channel and evaluate impact on performance and compliance.

- Cost Runway: model traffic growth against pay-as-you-go or credits-based pricing, set up alerts, and test auto-scaling scenarios.
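
The Cost Runway experiment can start as a spreadsheet, but a few lines of code make the assumptions explicit. The sketch below prices monthly request volume against volume tiers and reports the first month that would exceed a budget. The tier boundaries, rates, and growth figures you feed in are placeholders of your own; none of the numbers used with this code reflect any provider’s published pricing.

```python
from typing import List, Optional, Tuple

# Each tier is (upper_bound_requests, usd_per_million_requests), in ascending order.
Tier = Tuple[float, float]


def monthly_cost(requests: int, tiers: List[Tier]) -> float:
    """Price one month of requests, charging each tier's rate on its own slice."""
    cost, lower = 0.0, 0
    for upper, usd_per_million in tiers:
        if requests <= lower:
            break
        in_tier = min(requests, upper) - lower
        cost += in_tier / 1_000_000 * usd_per_million
        lower = upper
    return cost


def runway(start_requests: int, monthly_growth: float, months: int,
           tiers: List[Tier], budget_usd: float
           ) -> Tuple[List[Tuple[int, int, float]], Optional[int]]:
    """Project (month, requests, cost) rows and the first month over budget."""
    projection = []
    first_over = None
    requests = float(start_requests)
    for month in range(1, months + 1):
        cost = monthly_cost(int(requests), tiers)
        projection.append((month, int(requests), round(cost, 2)))
        if first_over is None and cost > budget_usd:
            first_over = month
        requests *= 1 + monthly_growth  # compound growth, e.g. 0.15 for 15%
    return projection, first_over
```

With hypothetical tiers such as `[(100_000_000, 5.0), (float("inf"), 4.0)]`, you can vary the growth rate and see how many months of headroom a given budget buys, then set alert thresholds a month or two ahead of that point.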

Closing thought: what would you test first?

If you could redesign your hosting stack today with today’s options, where would you start? Would you prioritize latency, cost predictability, or regulatory compliance to begin with—and how would you prove to your stakeholders that your hosting choices are trustworthy and adaptable?

And if you could architect a hosting layer that makes trust feel almost invisible to users, what would that layer look like? What would you need to prove to leadership to make that vision real, and how would you measure its success in real-world use?

This is a living blueprint, not a final verdict. Build it, test it, and let the outcomes rewrite the next chapter of your product’s resilience.


Final takeaway

Hosting blockchain apps is a design problem that scales with your product. Start with a trusted core, add resilient data paths, and embed governance and privacy where they matter. Then iterate, because the best architecture isn’t the one you publish, but the one you continuously improve in service of your users.