Hosting DApps – Centralized Cloud, Edge, and On-Chain—Where Should Your Code Call Home?

A few weeks ago, a friend pinged me from a hillside village with a shaky internet connection. His wallet timed out just long enough for the moment to pass, and his DApp never quite felt like a lived experience. In that moment I realized something obvious and stubborn: hosting a DApp isn’t just about code and contracts; it’s about where data travels, how quickly it travels, and who is invited to trust the path. So I started asking a simple question: where should a DApp live in 2025, when edge networks are thriving, when permanent storage is becoming cheaper and more accessible, and when users expect instant, browser-fast interactions no matter where they are?

A hook I can’t shake: latency as trust

Latency isn’t merely a technical metric. It’s a form of trust. If a user reaches for your UI and waits, they reach for a fallback—any fallback. They click away, or they assume the app isn’t reliable. That intuition has become sharper as edge-first hosting matures, and as permanent storage options appear at scale. Cloudflare’s recent push toward full-stack apps on Workers—supporting popular front-end frameworks and even serverless APIs inside the edge—illustrates a real shift: you can ship front-ends, APIs, and stateful logic closer to users than ever before. If latency is trust, the edge is offering a new kind of credibility. Yet permanence, censorship resistance, and long-term durability are perched elsewhere on the stack—on-chain storage layers and distributed networks that outlive even the fastest CDN.

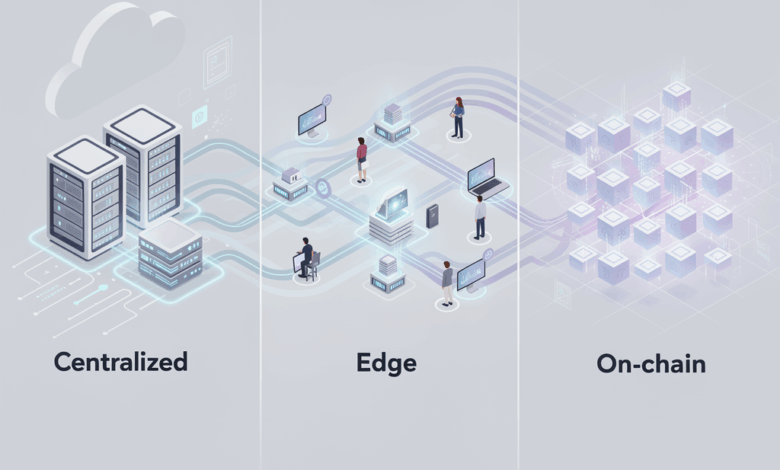

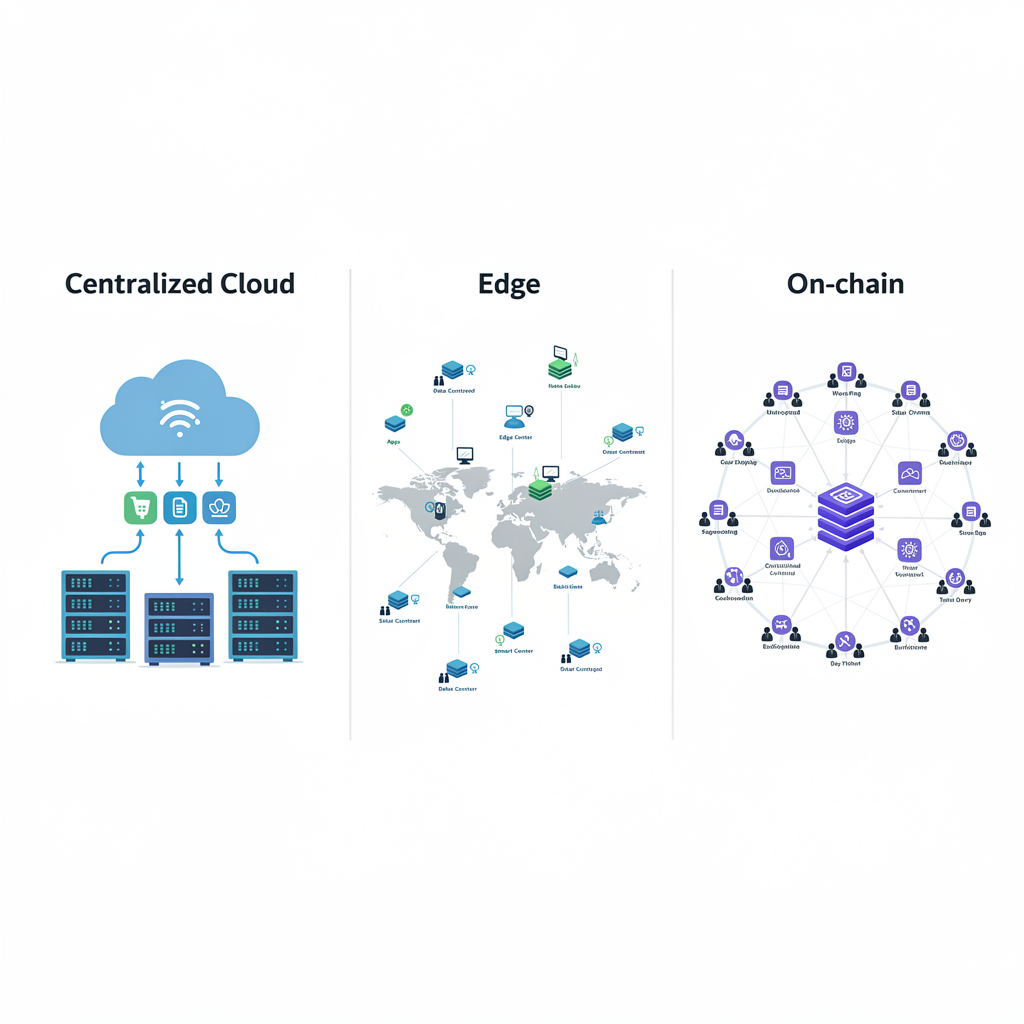

Problem/Situation Presentation: three worlds, one choice

- Centralized Cloud (the familiar): It’s simple, predictable, and straightforward to scale. You get a familiar dev workflow, robust tooling, and a single place to debug. When you deploy to a centralized cloud, you buy consistency, but you also braid your app to a single provider’s economics, regional availability, and policy shifts. For many teams, it’s the default path because it lowers the cognitive load and speeds initial iteration.

- Edge Hosting (the latency frontier): The edge promises dramatically lower round-trips by running code close to users. New “full-stack” stacks let you deploy front-end, API, and even small stateful parts at edge locations worldwide. The trade-off is architectural complexity, debugging across distributed locations, and keeping state synchronized if your app grows beyond tiny services. Still, the trend is undeniable: edge-native developers are shipping more than static assets; they’re shipping logic that reacts at the speed of thought for users everywhere. Recent industry moves show a broad embrace of edge-first patterns, with major players expanding native support for frameworks and deployments directly at the edge.

- On-Chain / Decentralized Storage (the memory of the web): If permanence, verifiability, and censorship resistance matter, on-chain data and decentralized storage are compelling. Arweave’s PermaWeb continues to scale long-term storage, while Filecoin and IPFS-driven approaches enable decoupled hosting where you don’t own the storage, but you own the data’s accessibility. Services like Bundlr simplify pushing data onto permanent storage networks, enabling practical use for metadata and assets alongside on-chain logic. The result is a hybrid reality: fast user experiences backed by a durable memory that persists beyond any single provider.

This triad is no longer a theoretical concern. It’s a real toolkit, and your choice should be guided by what you’re optimizing for—latency, durability, cost, and risk tolerance.

Value of This Article: a practical way to think about hosting DApps

What follows isn’t a verdict about one perfect stack. It’s a framework to help you reason in public, with your team and your users, about where to place each piece of your DApp. The landscape in 2025 features a maturing edge ecosystem, proactive hybrid architectures, and increasingly accessible permanent storage options. Cloud-native, edge-native, and on-chain-native patterns are no longer mutually exclusive; they’re ingredients in a recipe you assemble for each feature, lifecycle stage, and audience segment.

- Feasibility and confidence: you can start with a simple, centralized deployment for MVPs, then progressively move critical latency-sensitive components to the edge while maintaining core state in a robust, durable layer.

- Step-by-step preview: expect a staged approach—quantify latency budgets, map data flows, and decide which assets belong in which tier of hosting. You’ll learn how to segment data, how to cache, and how to validate durability with verifiable storage.

- Barriers, eased: by recognizing constraints early—costs, complexity, and vendor lock-in—you can design around them. The modern hosting stack is not a single platform; it’s a mesh of options you can orchestrate with awareness rather than guesswork.

A journey through contemporaries and tech tides

Edge-first hosting is no longer a novelty. Cloudflare’s latest platform updates reveal a world where you deploy front-end, APIs, and lightweight state right at the edge, with support for popular frameworks reaching general availability (GA). That makes it practical to ship DApps whose first impression is instantaneous. Beyond that, the “supercloud” and multi-cloud-edge ecosystems are crystallizing, aiming to support Web3 workloads, cross-chain interactions, and AI-enabled experiences at the edge, with tokenomics and governance crafted to sustain a distributed compute fabric. Partnerships that connect AI agents with decentralized compute hint at a future where dynamic workloads roam across edge nodes under compliant, verifiable rules.

Meanwhile, IPFS and Filecoin-based approaches continue to evolve, decoupling content routing from storage so you don’t pin your content to a single gateway. Fleek and similar projects push this further toward permissionless gateways and decentralized bandwidth, paired with Arweave’s growing permanence guarantees. In practice, this means you can design a DApp whose assets are resilient to censorship and provider failures, while still delivering snappy user experiences through edge tech. And for data that must endure, Bundlr and similar tooling offer a practical path to push even large assets into permanent storage layers without breaking the bank.

What does this convergence mean for your architecture? It means you can mix and match with intent: latency for users, durability for critical data, and governance for your protocol. It invites you to imagine a spectrum rather than a fixed point—a spectrum where not every feature needs to be born on-chain, but every feature deserves a trusted, reliable home.

Is this really a choice, or a conversation we keep having?

I don’t pretend to have a final, universal answer. What I learn from watching teams experiment is that the best solutions are often hybrid: front-ends sit at the edge or in a fast CDN, critical state persists in a durable layer (maybe on-chain or in a decentralized store), and less critical logs or archives drift toward cheaper, scalable storage. The art is designing for the right balance: latency budgets that meet user expectations, and durability budgets that feel fair for your project’s risk tolerance and budget.

If you’re building a DApp today, consider this question with your team: which parts must survive a service disruption, and which can be rebuilt quickly? From there, you can decide where the data should live, who should serve it, and how to verify it later when someone asks, “Where did this data come from, again?”
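One way to make that question concrete is to write the answers down as data and let a small rule assign each asset a home. The sketch below is only an illustration: `Tier`, `DataAsset`, and `chooseTier` are hypothetical names, the sample assets are invented, and the rule order reflects one possible policy rather than a standard.

```typescript
// Illustrative only: Tier, DataAsset, and chooseTier are hypothetical names,
// and the rule order encodes one possible policy, not a standard.
type Tier = "edge" | "permanent" | "cloud";

interface DataAsset {
  name: string;
  mustSurviveDisruption: boolean; // would losing it break user trust?
  quickToRebuild: boolean;        // can it be regenerated from other sources?
  latencySensitive: boolean;      // is it on a user-facing hot path?
}

function chooseTier(asset: DataAsset): Tier {
  // Data that must survive and cannot be rebuilt gets a permanent home of record.
  if (asset.mustSurviveDisruption && !asset.quickToRebuild) return "permanent";
  // Rebuildable data on a hot path is served from the edge.
  if (asset.latencySensitive) return "edge";
  // Everything else stays on conventional cloud storage.
  return "cloud";
}

// Invented sample inventory for demonstration.
const assets: DataAsset[] = [
  { name: "nft-metadata", mustSurviveDisruption: true, quickToRebuild: false, latencySensitive: true },
  { name: "frontend-bundle", mustSurviveDisruption: false, quickToRebuild: true, latencySensitive: true },
  { name: "debug-logs", mustSurviveDisruption: false, quickToRebuild: true, latencySensitive: false },
];

const plan: Record<string, Tier> = {};
for (const asset of assets) plan[asset.name] = chooseTier(asset);
console.log(plan);
```

The function returns the home of record, not the only place the data may appear. An asset assigned to the permanent tier can still be cached at the edge for speed.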

A closing thought that invites your next experiment

As you prototype, I invite you to test a simple premise: deploy the user-facing parts at the edge for immediate responsiveness, while reserving the most critical, immutable data for a permanent layer. Then observe how your users react when they can trust that what they see today won’t vanish tomorrow. In the end, hosting a DApp is not just about architecture; it’s about shaping an experience that respects time, trust, and the memory of the web. What experiment will you run first to test this balance in your next release?

Latency as trust: where should a DApp live in 2025?

A friend once pinged me from a hillside village, his internet barely stable enough to keep a wallet open long enough to sign a transaction. In that moment I realized something obvious and stubborn: hosting a DApp isn’t just about code and contracts; it’s about where data travels, how quickly it travels, and who is invited to trust the path. So I started asking a simple question: where should a DApp live in 2025, when edge networks are thriving, when permanent storage becomes cheaper and more accessible, and when users expect browser-fast interactions no matter where they are?

Three hosting worlds, one practical question

Today you have a spectrum, not a single fixed point. Each world has its own rhythms, risks, and rewards.

Centralized Cloud: familiarity with a hinge on cost and control

- Pros: predictable performance, robust tooling, unified debugging, and a familiar dev workflow. Deploying to a centralized cloud often feels like a safe first step for MVPs because it minimizes cognitive load and accelerates iteration.

- Cons: you braid your app to a provider’s economics, policy swings, and regional outages; vendor lock-in can bite when you scale or pivot.

- Practical takeaway: if latency budgets aren’t yet tight, start here and ship quickly. Then clearly map which pieces of your app would benefit from a different home as you grow.

Edge Hosting: the latency frontier

- Pros: dramatically lower round-trips by running code near users; new “full-stack” stacks let you deploy front-end, APIs, and lightweight state at edge locations worldwide. Cloudflare’s recent pushes show front-ends, APIs, and some state can live inside a Worker, bringing instant, browser-fast experiences close to users.

- Cons: architectural complexity grows; debugging across distributed edge locations is harder; state synchronization becomes a design concern as your app grows beyond tiny services.

- Practical takeaway: edge-native patterns are no longer experimental. If you care about responsiveness and user perception of speed, experiment with shipping critical surfaces to the edge first while keeping core state durable elsewhere.

On-Chain / Decentralized Storage: memory that outlives a provider

- Pros: permanence, verifiability, and censorship resistance. Decentralized storage layers and on-chain memory offer a form of trust that outlives a single platform. Arweave’s PermaWeb, Filecoin/IPFS, and related tooling create a durable backbone for assets and metadata. Tools like Bundlr make pushing data into permanent storage more affordable and practical.

- Cons: latency can be higher for retrieval unless you architect caching or decoupled delivery, and your UX must gracefully handle retrieval variability. Complexity increases when mixing durable storage with mutable front-ends.

- Practical takeaway: treat permanent storage as the long-term memory of your DApp. Use it for critical data, provenance, and important assets that must persist beyond any single provider’s uptime or business model.
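The caching point in the list above can be sketched with standard HTTP `Cache-Control` directives. Content addressed by its hash never changes, so an edge cache can hold it for a long time, while the mutable entry point should be revalidated. The names `AssetKind` and `cacheControlFor` are hypothetical, and the short TTL for API responses is an assumption to tune, not a recommendation.

```typescript
// Illustrative helper: AssetKind and cacheControlFor are hypothetical names.
type AssetKind = "content-addressed" | "html-shell" | "api-response";

function cacheControlFor(kind: AssetKind): string {
  switch (kind) {
    case "content-addressed":
      // Bytes behind a content hash never change, so caches may keep them for a year.
      return "public, max-age=31536000, immutable";
    case "html-shell":
      // The mutable entry point is revalidated on each use so new releases show up.
      return "no-cache";
    case "api-response":
      // Assumed short TTL; tune to how stale chain-derived data is allowed to be.
      return "public, max-age=5, stale-while-revalidate=30";
  }
}

console.log(cacheControlFor("content-addressed"));
console.log(cacheControlFor("html-shell"));
```

An edge function or gateway could attach these values as response headers, which lets slow retrieval from permanent storage happen once per cache location instead of once per user.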

This triad isn’t a theoretical exercise. It’s a practical toolkit you assemble based on latency, durability, cost, and risk tolerance. The question isn’t which world is “best” in the abstract, but which piece of your app belongs where, and how to orchestrate them so the user experience remains coherent.

Why this matters now: signals from the ecosystem

- Edge-first, full-stack at the edge: major players are shipping front-end, API, and even stateful logic at edge locations. For example, Cloudflare has been expanding GA support for popular frontend frameworks and enabling full-stack apps inside Workers, with static assets becoming a first-class path for edge delivery. This makes truly edge-hosted DApps more practical and production-ready than ever before.

- A growing “supercloud” and hybrid ecosystem: edge networks are moving toward ecosystem-scale compute and Web3 onboarding, with interoperable wallets, multi-chain pay-as-you-go economics, and governance that supports edge deployments at scale. Roadmaps and partnerships point to decentralized compute that roams across near-edge to edge nodes while still respecting security and compliance.

- Decoupled IPFS storage with durable backends: the trend is moving away from tying your assets to a single gateway. Projects are decoupling IPFS content routing from storage, enabling routing on top of Filecoin, Arweave, or other storage layers. This reduces vendor lock-in and increases censorship resistance while keeping access fast through edge-friendly gateways.

- Permanent storage with evolving tooling: on-chain-friendly storage stacks (Arweave, Bundlr) are maturing, enabling larger-scale, cheaper, and longer-lasting storage that pairs with conventional DApp logic. This reinforces the idea that you can design for both speed and durability by distributing responsibilities across the stack.

If latency is trust, the edge offers a new credibility layer. If permanence is memory, on-chain and decentralized storage offer a durable archive. The modern DApp architecture is not a single point of failure but a distributed orchestra where each instrument plays in its right place.

A practical framework for decision-making (in public, with your team)

- Start with a simple MVP on centralized cloud to validate the idea and establish a baseline UX. This minimizes upfront risk and accelerates learning.

- Map data flows and latency budgets. Decide which assets must be delivered with sub-100ms responses and which can tolerate slower retrieval in exchange for durability guarantees.

- Segment data by durability needs. Use the edge for the user-facing surfaces and lightweight APIs; reserve critical, immutable data for permanent storage layers (on-chain or decentralized storage).

- Build a staged migration plan. Begin by moving latency-sensitive components to the edge while maintaining core state and governance in durable layers. Monitor performance, error rates, and user sentiment as you migrate.

- Consider decoupled storage for assets and routing. IPFS/Filecoin or Arweave can store content with strong persistence guarantees, while edge networks deliver fast access and rich client experiences.

- Plan for governance and costs. As you scale, you’ll want to consider tokenomics, cross-chain interactions, and budgeting for edge compute vs. centralized compute, all while avoiding vendor lock-in.

This is not a recipe with a single ingredient list. It’s a balancing act: where you need speed, where you need verifiability, and where you need to survive unexpected outages or policy shifts.
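A latency budget only helps if you check measurements against it. The sketch below computes a 95th percentile with the nearest-rank method and compares it to a budget. `percentile`, `LatencyBudget`, and `checkBudget` are hypothetical names, the flow name is made up, and the sample timings are invented for illustration.

```typescript
// Illustrative only: percentile, LatencyBudget, and checkBudget are hypothetical names.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  // Nearest-rank method: the smallest value with at least p% of samples at or below it.
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

interface LatencyBudget {
  flow: string;  // name of the user-facing flow
  p95Ms: number; // budgeted 95th-percentile latency in milliseconds
}

function checkBudget(budget: LatencyBudget, samplesMs: number[]) {
  const observedP95Ms = percentile(samplesMs, 95);
  return { flow: budget.flow, observedP95Ms, withinBudget: observedP95Ms <= budget.p95Ms };
}

// Invented round-trip timings (ms) with one slow outlier.
const samples = [42, 55, 61, 48, 230, 51, 47, 66, 58, 49];
console.log(checkBudget({ flow: "wallet-connect", p95Ms: 100 }, samples));
```

With only ten samples, a single slow request decides the 95th percentile, so this flow reports as over budget. That is the behavior you want from a tail metric: the user on the shaky connection is the one the budget is meant to protect.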

Concrete actions you can take today

1) Instrument latency budgets for user-facing flows. Define acceptable round-trips for critical interactions and identify which components can be edge-hosted at a minimum viable scale.

2) Deploy a lightweight frontend and core API to an edge platform. For example, leverage edge-native stacks that support modern frameworks and GA-ready deployments at scale. This unlocks instant impressions for users regardless of geography.

3) Separate your data paths. Keep immutable or critical state in a durable layer (on-chain or decentralized storage) and place non-critical content and caches on edge or CDN infrastructure.

4) Experiment with IPFS-based hosting plus decoupled storage. Use gateways that align with your latency and governance requirements, and evaluate Filecoin or Arweave as long-term archives for essential assets.

5) Explore Bundlr or similar tools to push data efficiently to permanent storage networks, reducing costs while improving availability.

6) Build observability around data provenance. When you retrieve data, can you verify it came from the expected source? Design your UX to communicate credibility without exposing users to opaque cryptography.

7) Create a rollback and failover plan. If a given hosting surface becomes unavailable, how will you gracefully degrade without losing trust? Your design should emphasize resilience and transparency.

These steps aren’t abstract; they’re actionable paths that align with current industry momentum toward edge computing, hybrid architectures, and durable storage. Cloud-native, edge-native, and on-chain-native patterns are no longer mutually exclusive—they’re ingredients you mix to fit feature by feature, lifecycle stage, and audience.
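The provenance check in step 6 can start very small: record a digest when you upload, then compare it against whatever a gateway hands back. The sketch below uses Node’s built-in `crypto` module. `sha256Hex` and `verifyAgainstDigest` are hypothetical names, and the sample payloads are invented. Note the assumption: this compares a plain SHA-256 digest that you stored yourself. It is not IPFS CID validation, which hashes chunked data structures rather than the raw file.

```typescript
import { createHash } from "node:crypto";

// Illustrative helpers: sha256Hex and verifyAgainstDigest are hypothetical names.
function sha256Hex(bytes: Uint8Array): string {
  return createHash("sha256").update(bytes).digest("hex");
}

// Returns true only if the retrieved bytes hash to the digest recorded earlier.
function verifyAgainstDigest(bytes: Uint8Array, expectedHex: string): boolean {
  return sha256Hex(bytes) === expectedHex.toLowerCase();
}

// Invented payloads: the original upload and a tampered copy from some gateway.
const original = new TextEncoder().encode('{"name":"Token #1"}');
const tampered = new TextEncoder().encode('{"name":"Token #2"}');

// In practice the digest would be stored at upload time, for example on-chain.
const recordedDigest = sha256Hex(original);

console.log(verifyAgainstDigest(original, recordedDigest)); // true
console.log(verifyAgainstDigest(tampered, recordedDigest)); // false
```

The UX can then reduce the result to a simple verified or unverified indicator, so users see credibility without seeing the cryptography.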

A closing thought: what experiment will you run next?

Perhaps your next release will place the user-facing surface at the edge for immediate responsiveness, while the most critical data remains anchored in a permanent layer. Or maybe you’ll begin with a robust, centralized MVP and gradually distribute functions to the edge as you gain confidence and budget. The core question remains the same: what part of your DApp deserves sovereignty, and where does the memory of your web experience belong?

If you’re building today, I invite you to turn this into a practical experiment with your team: prototype an edge-first surface, connect it to a durable data layer, and observe how your users respond when what they see today can endure tomorrow. What would you test first to balance latency, durability, and governance in your next DApp release?

Latency as trust, memory as backbone: concluding thoughts on where a DApp should live

A few weeks ago, a friend messaged me from a hillside village where the internet wobbled like a candle flame. His wallet timed out just long enough for a signature to slip away, and suddenly the idea of a perfectly coded DApp felt almost petty next to the fragility of connection. That moment didn’t just teach me about latency; it reminded me that hosting is a question of trust, not just infrastructure. If latency is trust, then the edge is the credibility you earn in the moment—and permanence is the memory you hand back to your users long after the moment has passed. With edge, cloud, and on-chain storage each playing a distinct role, the modern DApp becomes a choreography of surfaces and memories, each chosen for what it preserves and what it delivers now.

Key Summary and Implications

Hosting a DApp today isn’t a single decision; it’s a spectrum. Edge hosting can deliver browser-fast experiences by bringing logic closer to users, while durable, long-term data lives in on-chain or decentralized storage. Centralized cloud remains a practical launchpad for MVPs and rapid iteration, but it trades off some long-term sovereignty for simplicity. The broader implication is clear: design per feature, not per layer. Your architecture should be a living map that assigns each piece of data, each user interaction, to the home that best serves its durability, latency, and governance needs.

None of this is a universal verdict. It is a framework for public, collaborative decision-making with your team and your users: balance latency budgets against durability budgets, segment data by its need for permanence, and orchestrate a hybrid stack that can weather outages, policy shifts, and evolving user expectations. In practice, this means moving things that benefit most from immediacy toward the edge, while anchoring critical, immutable data in a memory that outlives any single provider.

From a broader view, this approach invites a spectrum mindset: you don’t pick one home for all data. You curate a portfolio of homes—edge for immediacy, durable storage for memory, cloud for expedience—then choreograph them with clear data paths, governance rules, and fallback strategies. The trend toward edge-native stacks, coupled with stronger, cheaper permanent storage options, makes this hybrid architecture not just possible but prudent for real-world DApps in 2025 and beyond.

Action Plans

1) Start small with a centralized MVP to validate the idea and establish a baseline user experience. This minimizes upfront risk and accelerates learning.

2) Define latency budgets for user-facing flows and identify which components can confidently live at the edge without compromising correctness.

3) Build a staged migration plan: move latency-sensitive surfaces to the edge first, while keeping core state and governance in a durable layer (on-chain or decentralized storage).

4) Architect data paths with clear segmentation: immutable or critical state in a permanent layer, while non-critical content and caches live at the edge or CDN, enabling fast iterations without risking durability.

5) Experiment with decoupled storage approaches (IPFS/Filecoin or Arweave) for assets and metadata, paired with edge delivery to maintain snappy UX.

6) Explore Bundlr or similar tooling to push data efficiently to permanent storage networks, balancing cost and resilience.

7) Implement observability for data provenance: ensure users can verify where data came from, and communicate credibility clearly in the UX.

8) Develop a rollback and failover plan: design graceful degradation that preserves trust even if a hosting surface becomes unavailable.

9) Regularly review governance and cost considerations as you scale, including cross-chain interactions and edge compute economics, to avoid hidden vendor lock-in.

These steps aren’t vanity metrics; they’re actionable moves that reflect today’s momentum toward edge-first delivery, hybrid architectures, and durable storage. They help you build a DApp that feels fast when it matters and trustworthy when it matters most.
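The failover plan in step 8 can be written down as an ordered preference list plus an honest notice to the user. This is a minimal sketch under stated assumptions: `Surface`, `Health`, `PREFERENCE`, and `pickSurface` are hypothetical names, the three surfaces are examples, and the health flags would come from whatever monitoring you already run.

```typescript
// Illustrative only: Surface, Health, PREFERENCE, and pickSurface are hypothetical names.
type Surface = "edge" | "cloud-origin" | "static-snapshot";
type Health = Record<Surface, boolean>;

// Ordered from best experience to most degraded.
const PREFERENCE: Surface[] = ["edge", "cloud-origin", "static-snapshot"];

interface ServingDecision {
  surface: Surface | null; // null means nothing is currently reachable
  degraded: boolean;       // true when not serving from the preferred surface
  notice: string | null;   // message to show users, if any
}

function pickSurface(health: Health): ServingDecision {
  for (const surface of PREFERENCE) {
    if (health[surface]) {
      const degraded = surface !== PREFERENCE[0];
      return {
        surface,
        degraded,
        notice: degraded ? `Serving from ${surface}; some features may be limited.` : null,
      };
    }
  }
  return { surface: null, degraded: true, notice: "All hosting surfaces are unavailable." };
}

console.log(pickSurface({ edge: false, "cloud-origin": true, "static-snapshot": true }));
```

Returning the notice alongside the decision keeps the transparency requirement in the same place as the routing logic, so a degraded mode is never silent.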

Closing Message

The future of hosting a DApp isn’t about finding a single perfect layer; it’s about composing a resilient, believable experience. Place the user-facing surfaces at the edge for immediacy; anchor critical memories in durable layers that refuse to vanish with a provider’s uptime. Design with transparency and rollback in mind, so users feel they’re not just interacting with software, but with a trustworthy system that respects time, memory, and governance.

What experiment will you run first to test this balance in your next release? Perhaps you’ll begin with an edge-first surface, connected to a durable data layer, and watch how users respond to the assurance that what they see today can endure tomorrow. Or you might start with a centralized MVP and a clear migration plan, validating the economics before you distribute surfaces. Whatever you choose, the invitation remains the same: prototype, measure, and decide—together with your users—where each piece of your DApp should live.

If this resonates, start small, plan boldly, and keep the user at the center of your map. The architecture isn’t just a blueprint; it’s a commitment to speed, memory, and trust across every corner of the web.